(Credit: Elio Santos/Unsplash)

Virtual town sheds light on how our brains perceive places

By having people navigate a virtual town, researchers gained new insight into how our brains perceive places and help us navigate our environment.

The human brain uses three distinct systems to perceive our environment, according to new research.

There’s one system for recognizing a place, another for navigating through that place, and a third for navigating from one place to another.

For a new paper, the researchers designed experiments involving a simulated town and functional magnetic resonance imaging (fMRI) to gain new insights into these systems. Their results, which appear in the Proceedings of the National Academy of Sciences, have implications ranging from more precise guidance for neurosurgeons to better computer vision systems for self-driving cars.

“We’re mapping the functions of the brain’s cortex with respect to our ability to recognize and get around our world,” says senior author Daniel Dilks, associate professor of psychology at Emory University. “The PNAS paper provides the last big piece in the puzzle.”

Specialized systems

The experiments showed that the brain’s parahippocampal place area is involved in recognizing a particular kind of place in the virtual town, while the brain’s retrosplenial complex is involved in mentally mapping the locations of particular places in the town.

These new results follow a paper the researchers published last year showing that the brain’s occipital place area is involved in navigating through a particular place.

Previously, some researchers theorized that all three of these scene-selective cortical regions (the PPA, RSC, and OPA) were involved in navigation generally. “Our work continues to provide strong evidence that this theory is just not true,” Dilks says.

“We’re showing that there are specialized systems in the brain that play different roles involved with recognizing places and navigating,” adds first author Andrew Persichetti, who did the work as an Emory graduate student in the Dilks lab. He has since received his PhD in psychology and is now a postdoctoral fellow at the National Institute of Mental Health.

Getting around a virtual town

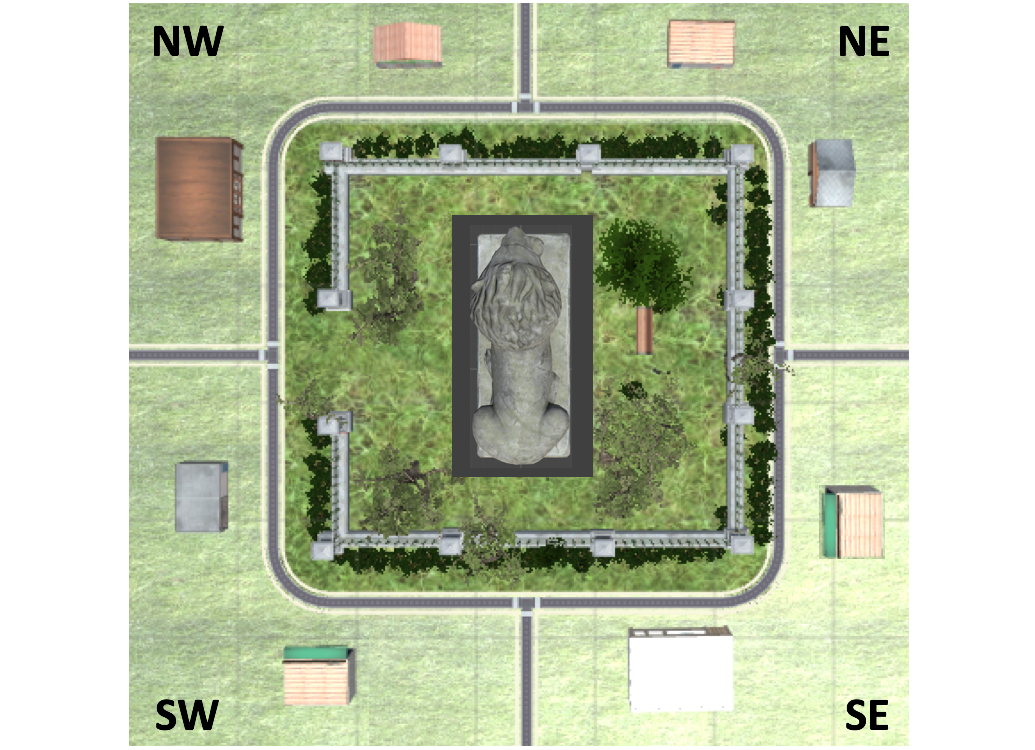

For the new paper, Persichetti developed experiments based on a virtual town he created, called “Neuralville,” and a simple video game.

“One of my favorite things about being a scientist is getting to design experiments,” he says. “It’s fun to try to come up with a clever, yet simple, way to answer a complex question.”

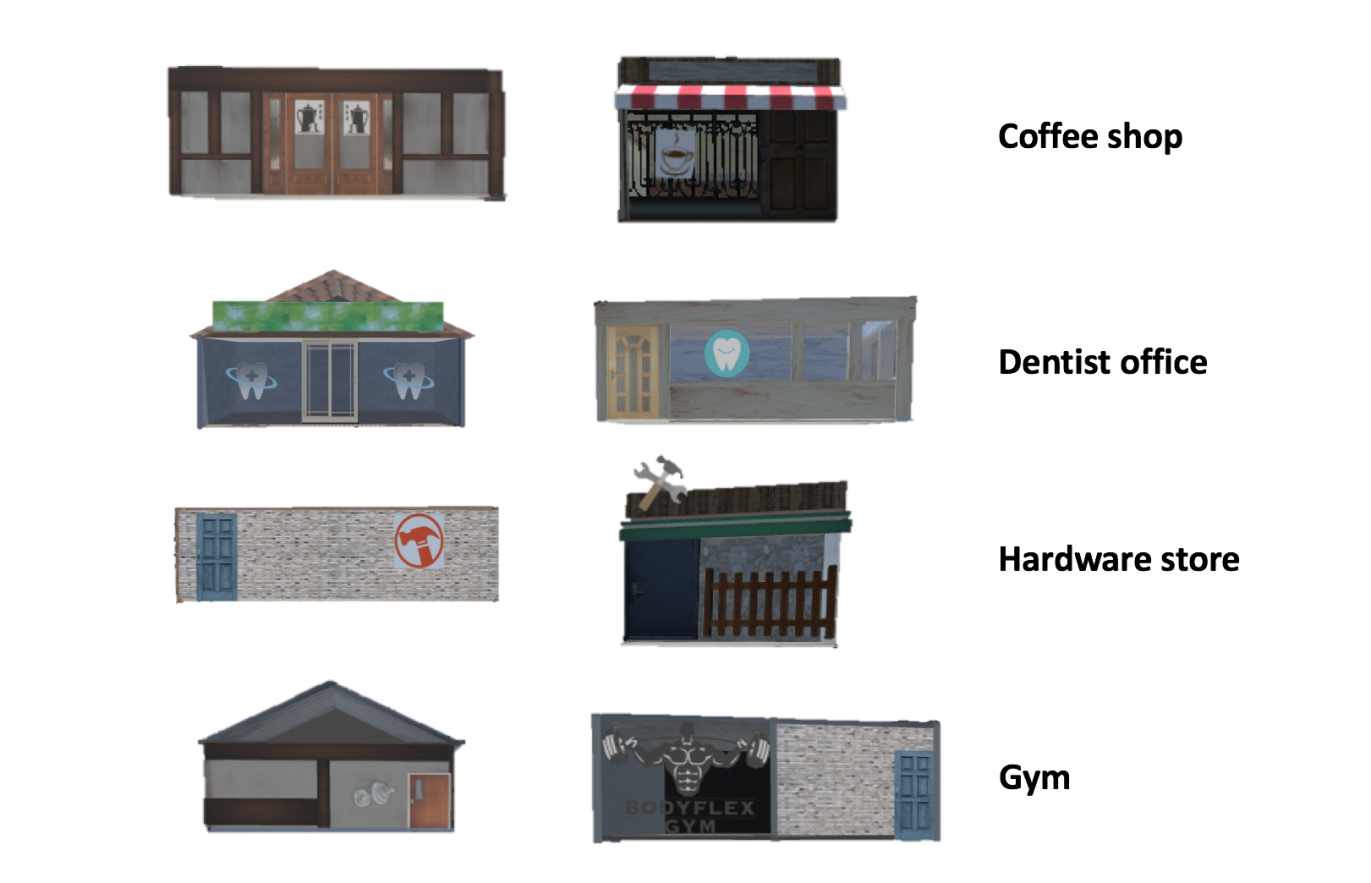

Neuralville consists of eight buildings, spread over four quadrants, or locations in the town, that surround a park. The buildings fall into four categories of two each: two coffee shops, two dentists’ offices, two hardware stores, and two gyms. Each quadrant contains two buildings, but no quadrant contains two buildings of the same category. Although the buildings can be grouped by category, location, or facing direction, none of the eight shares visual features such as shape, size, or texture.

Participants sat at a computer and became familiar with the town by “walking” around the four quadrants using the arrow keys on the keyboard. Researchers then dropped them into random locations in the town and gave them tasks such as “Walk to Coffee Shop A.” After about 15 minutes of this practice, researchers showed them images of each building and asked what type of building it was and in which quadrant of the town it was located.

Once a participant scored 100% on this quiz, researchers put them into an fMRI scanner. As they scanned the participants’ brains, researchers showed them a picture of one of the buildings, such as Coffee Shop B, and asked a question about it. For example: “If you’re directly facing Coffee Shop B, would Dentist Office A be to your right or to your left?”

The participants repeated the task 10 times as the fMRI recorded activity in the PPA and the RSC. The researchers analyzed the brain data in units called “voxels,” which are similar to pixels, except they are three-dimensional.

The results showed that the patterns of responses in the RSC were more similar for buildings that shared the same location than for buildings of the same category. The opposite was true for the patterns of responses in the PPA.

“These multi-voxel patterns reveal a spatial representation of these buildings in the RSC, and a categorical representation in PPA,” Persichetti says. “The RSC pattern basically puts them on a map in their proper locations, while the PPA pattern instead clusters them by their category.”
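The comparison described above, checking whether voxel patterns are more alike for buildings in the same location or for buildings of the same category, can be illustrated with a small simulation. The Python sketch below is only a rough illustration under stated assumptions: it uses synthetic data, not the study’s fMRI recordings; the assignment of buildings to quadrants is hypothetical (the article does not give the layout, so this one merely respects the stated constraints); and the helpers `simulate_patterns` and `mean_similarity` are invented for the example. It does not reproduce the researchers’ actual analysis pipeline.

```python
import numpy as np

# Hypothetical layout: (name, category, quadrant). Chosen only to satisfy the
# article's constraints: two buildings per quadrant, never two of one category.
BUILDINGS = [
    ("Coffee Shop A", "coffee", 0), ("Dentist Office A", "dentist", 0),
    ("Hardware Store A", "hardware", 1), ("Gym A", "gym", 1),
    ("Coffee Shop B", "coffee", 2), ("Hardware Store B", "hardware", 2),
    ("Dentist Office B", "dentist", 3), ("Gym B", "gym", 3),
]
CATEGORY, QUADRANT = 1, 2  # tuple indices into each BUILDINGS entry


def simulate_patterns(key_index, n_voxels=200, noise=0.3, seed=0):
    """Simulate one synthetic multi-voxel pattern per building.

    Buildings sharing the value at key_index (category or quadrant) share a
    common random signal vector; each building also gets independent noise.
    """
    rng = np.random.default_rng(seed)
    keys = sorted({b[key_index] for b in BUILDINGS}, key=str)
    signals = {k: rng.standard_normal(n_voxels) for k in keys}
    return np.array([
        signals[b[key_index]] + noise * rng.standard_normal(n_voxels)
        for b in BUILDINGS
    ])


def mean_similarity(patterns, key_index):
    """Mean Pearson correlation over building pairs sharing key_index."""
    corr = np.corrcoef(patterns)  # rows are buildings, columns are voxels
    n = len(BUILDINGS)
    values = [
        corr[i, j]
        for i in range(n)
        for j in range(i + 1, n)
        if BUILDINGS[i][key_index] == BUILDINGS[j][key_index]
    ]
    return float(np.mean(values))


# An "RSC-like" region whose patterns are driven by location, and a
# "PPA-like" region whose patterns are driven by category (both synthetic).
rsc_like = simulate_patterns(QUADRANT)
ppa_like = simulate_patterns(CATEGORY)

for label, patterns in [("RSC-like", rsc_like), ("PPA-like", ppa_like)]:
    print(
        label,
        "same location:", round(mean_similarity(patterns, QUADRANT), 2),
        "same category:", round(mean_similarity(patterns, CATEGORY), 2),
    )
```

Because no quadrant holds two buildings of the same category in this layout, the “same location” and “same category” pairs never overlap, so the two averages are directly comparable.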

‘Tip of the iceberg’

Clinical applications for the work include better brain rehabilitation methods for those with problems related to scene recognition and navigation. The research may also help improve computer vision systems, such as those for self-driving cars.

“Through evolution, the brain has figured out the optimal solution to recognizing scenes and navigating around them,” Dilks says. “And that solution appears to be one of specializing the different functions involved.”

For years, Dilks has been working to map the functional organization of the visual cortex. In 2013, he led the discovery of the OPA. In 2018, Dilks and Persichetti demonstrated that the OPA specializes in navigating through a particular place.

“While it’s incredible that we can show that different parts of the cortex are responsible for different functions, it’s only the tip of the iceberg,” Dilks says. “Now that we understand what these regions of the brain are doing, we want to know precisely how they’re doing it and why they’re organized in this way.”

Funding for the research came from grants from the National Eye Institute and a National Science Foundation Graduate Research Fellowship.

Source: Emory University

The post Virtual town sheds light on how our brains perceive places appeared first on Futurity.

This article uses material from the Futurity article, and is licenced under a CC BY-SA 4.0 International License. Images, videos and audio are available under their respective licenses.

Links/images:

- https://doi.org/10.1073/pnas.1903057116

- https://www.futurity.org/perception-environment-brain-1894262/

- https://www.futurity.org/brain-spatial-learning-1035552/

- https://news.emory.edu/features/2019/10/esc-navigating-neuralville/index.html

- https://www.futurity.org/brains-environment-navigation-systems-virtual-town-2179822/

- https://www.futurity.org