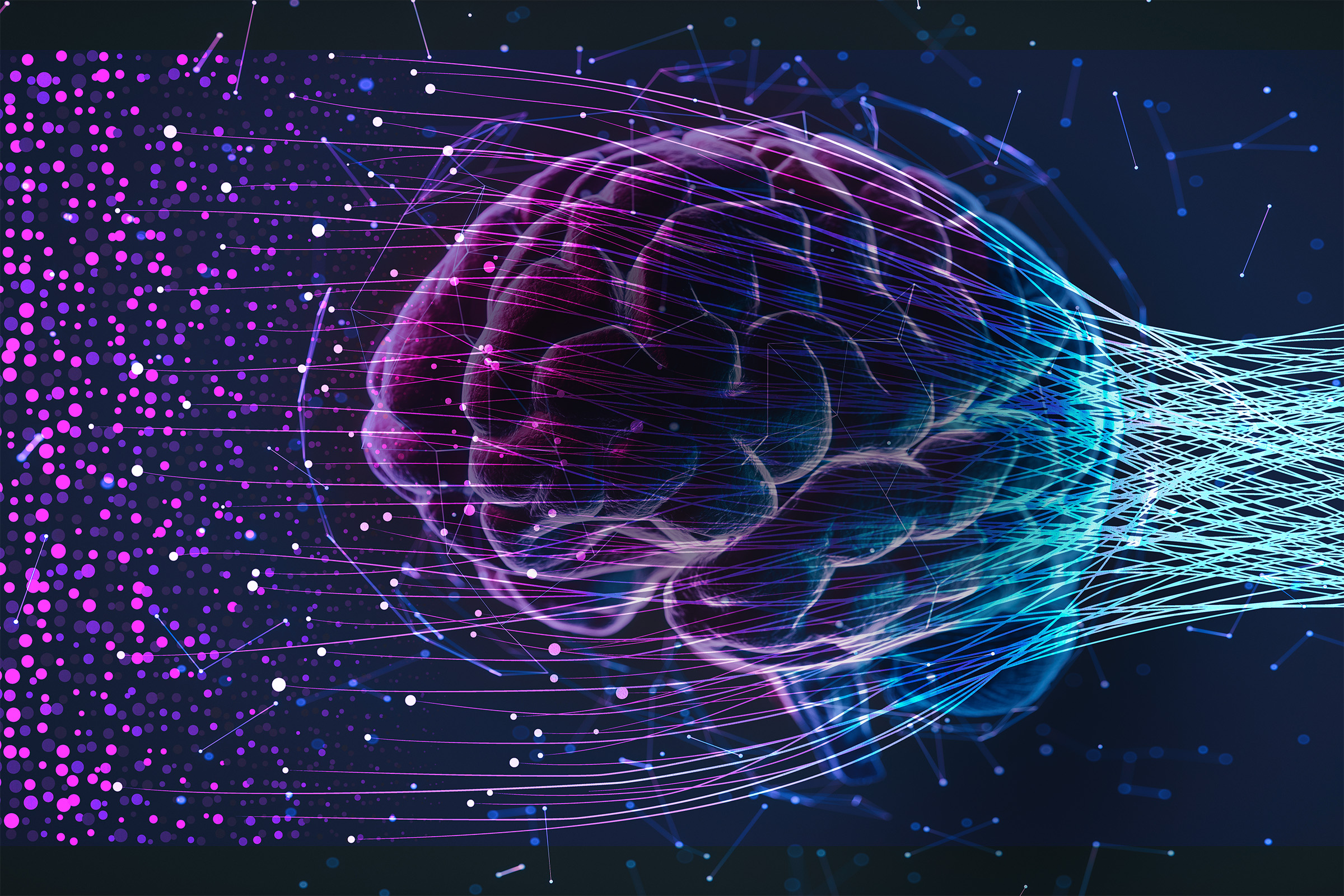

Understanding the visual knowledge of language models

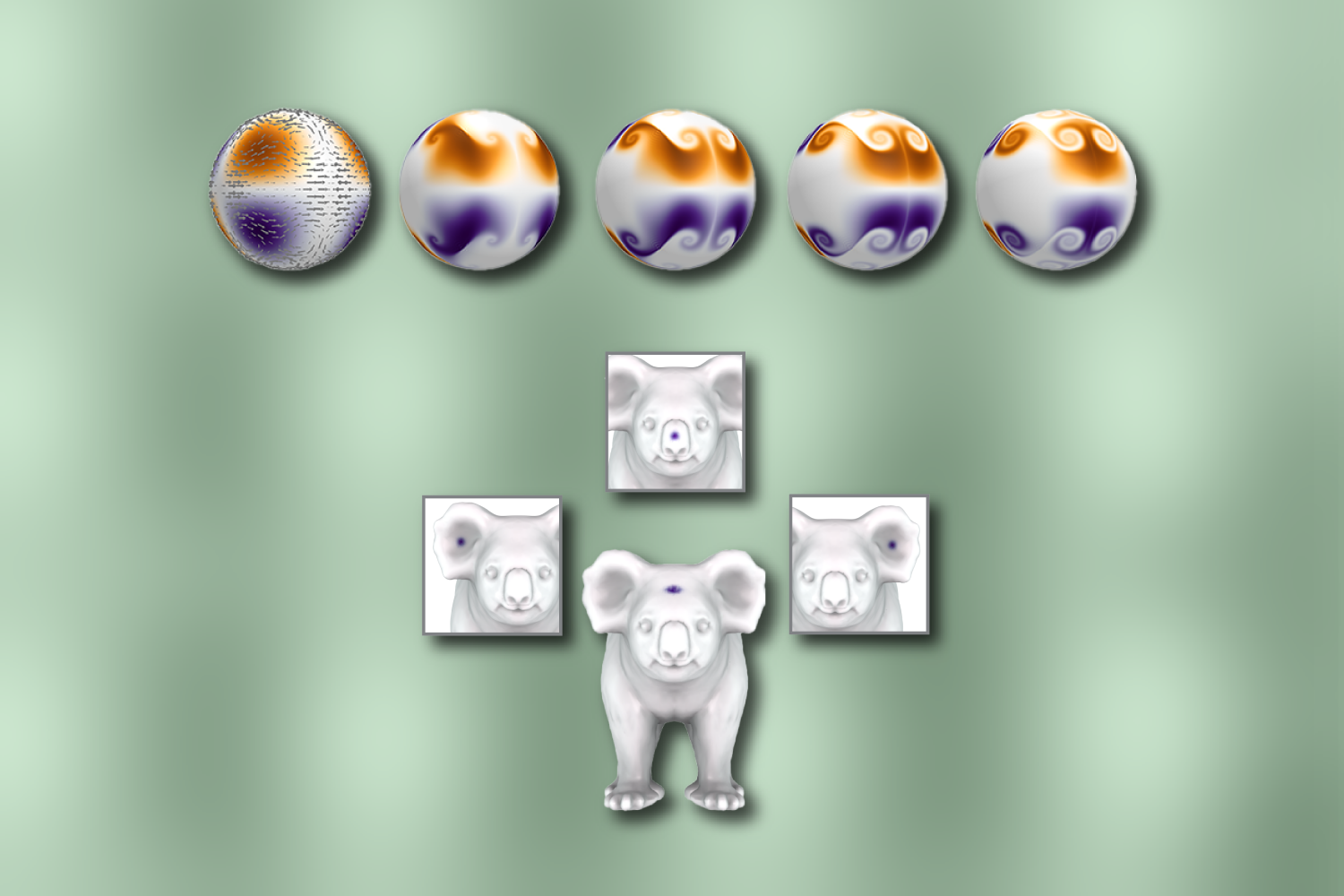

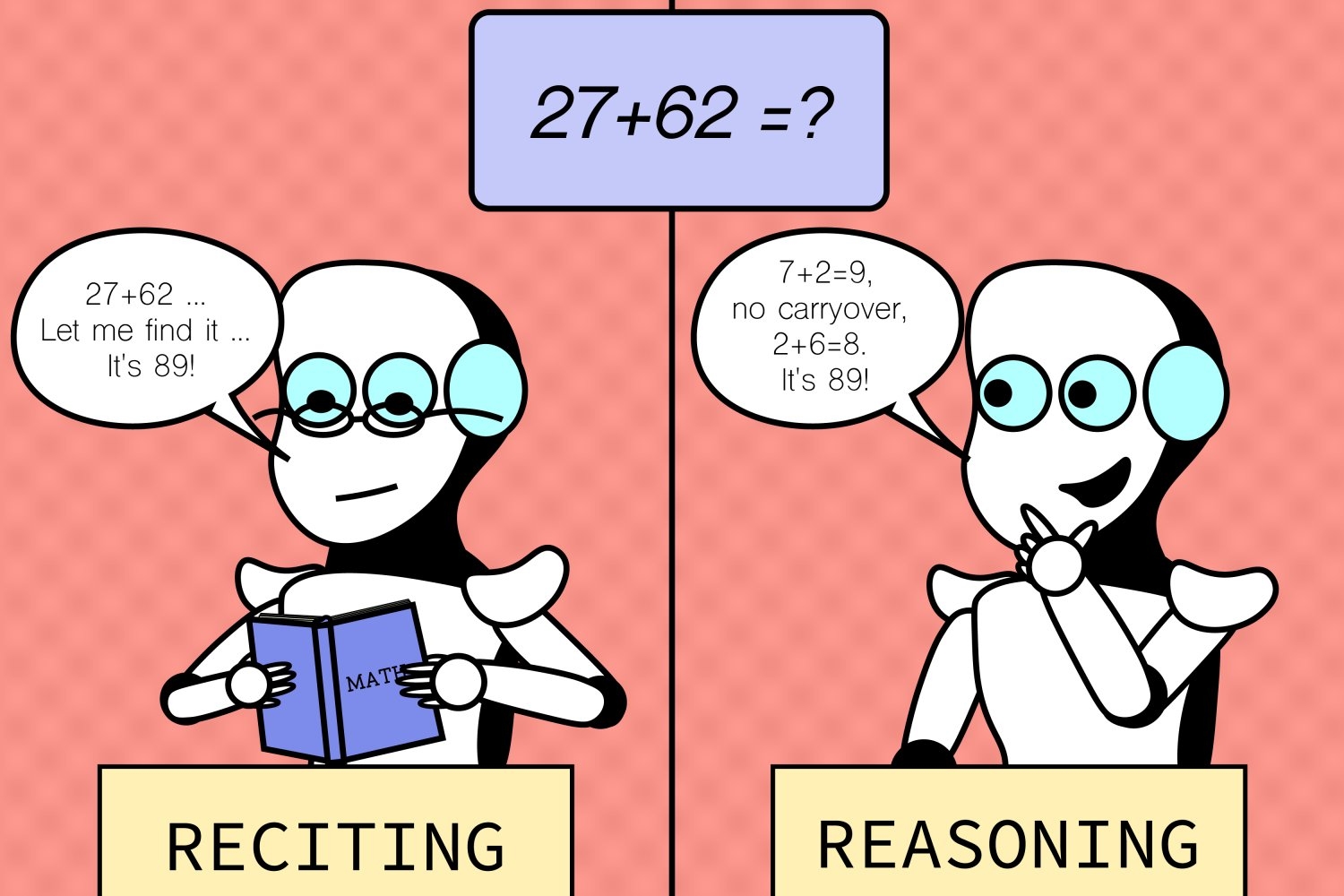

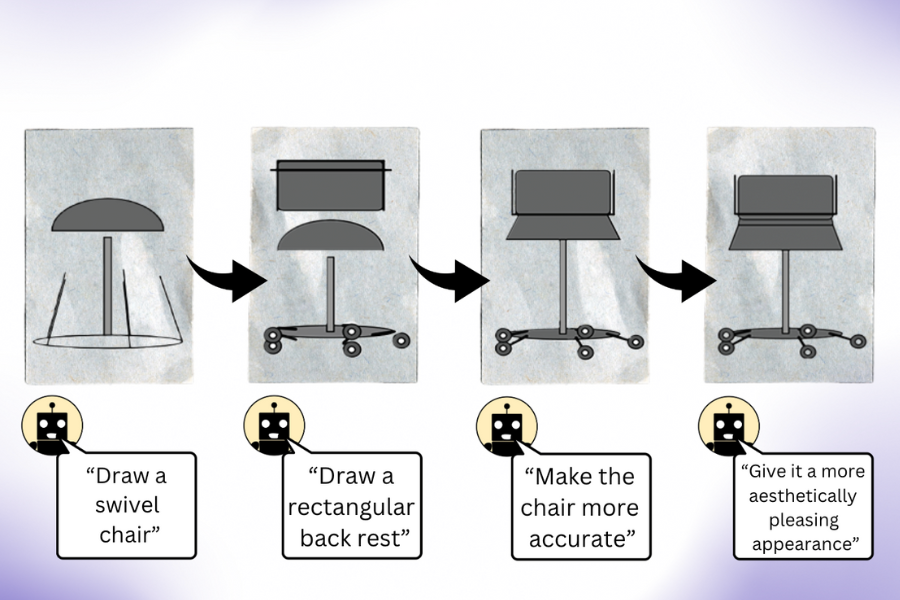

LLMs trained primarily on text can generate complex visual concepts through code with self-correction. Researchers used these illustrations to train an image-free computer vision system to recognize real photos.

Alex Shipps | MIT CSAIL

June 17, 2024 • ~6 min