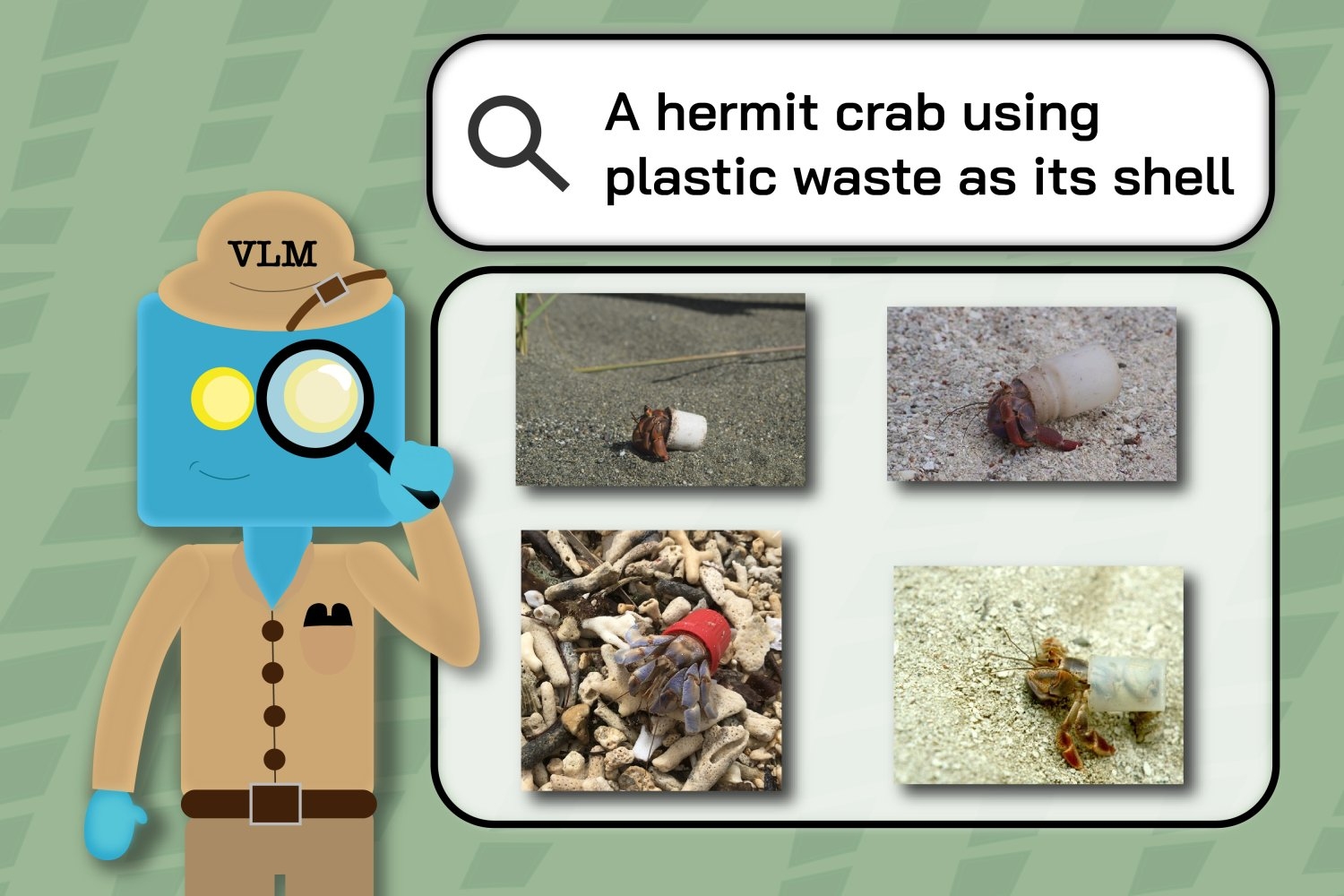

Ecologists find computer vision models’ blind spots in retrieving wildlife images

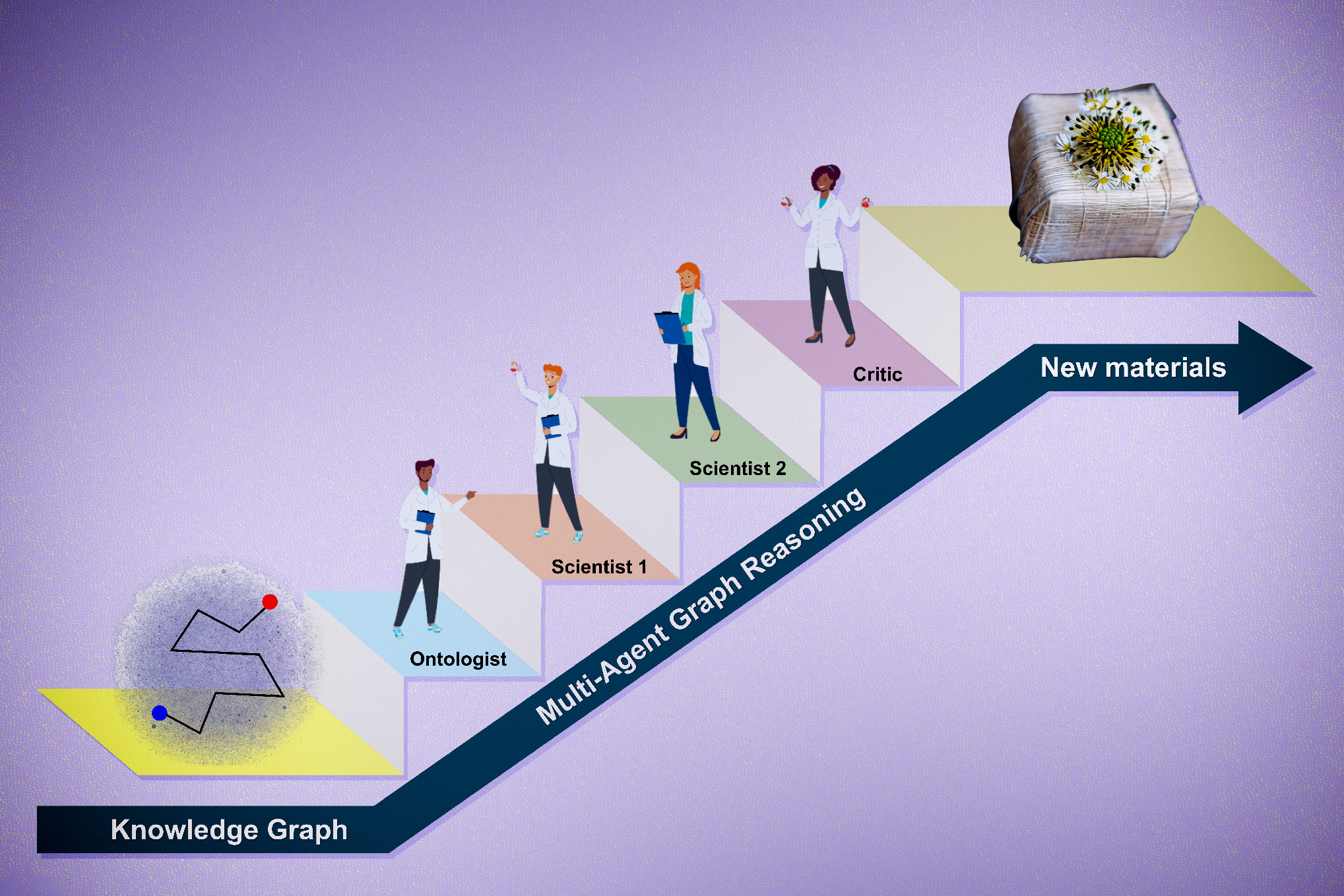

Biodiversity researchers tested vision systems on how well they could retrieve relevant nature images. More advanced models performed well on simple queries but struggled with more research-specific prompts.

Dec. 20, 2024