Understanding the visual knowledge of language models

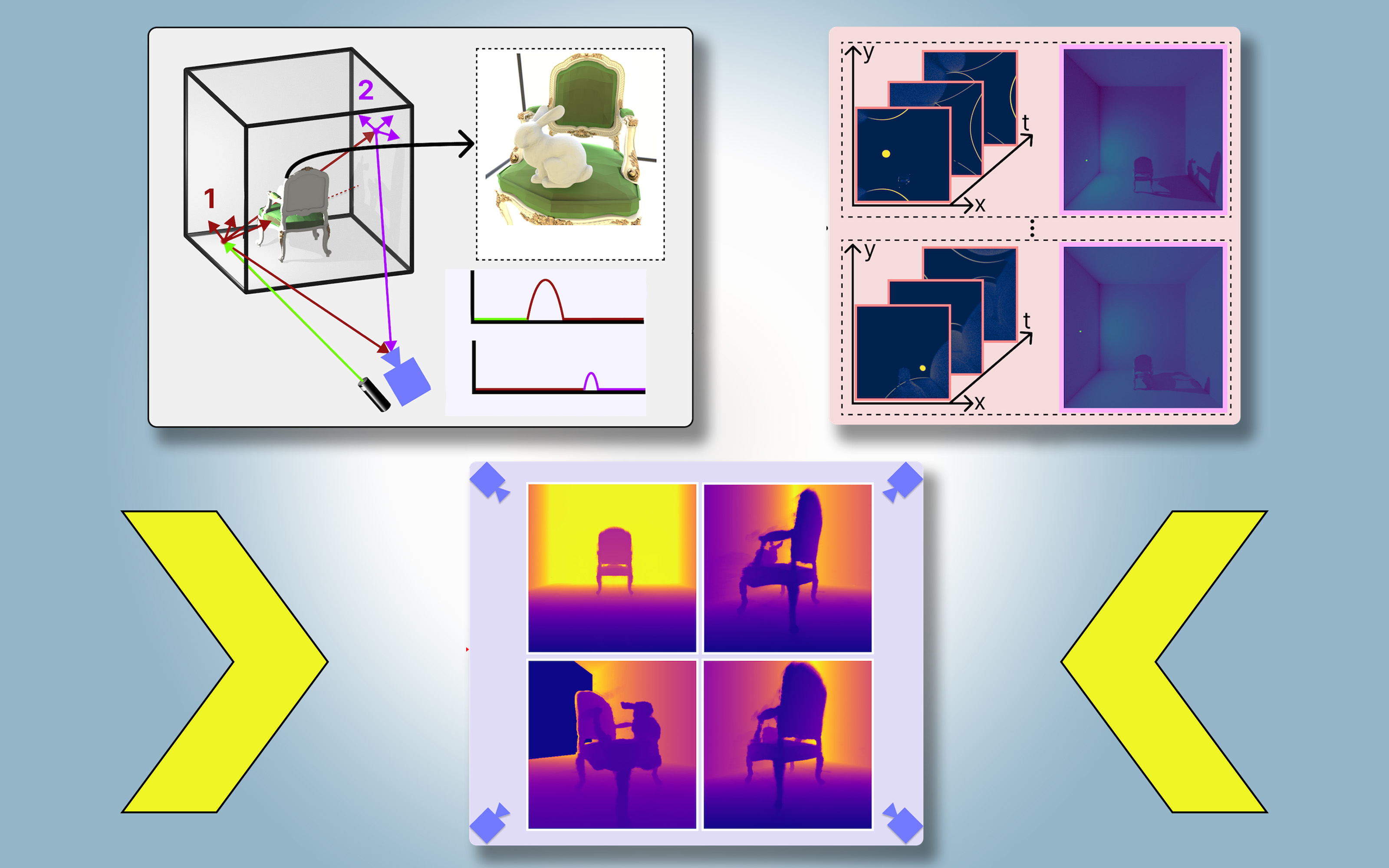

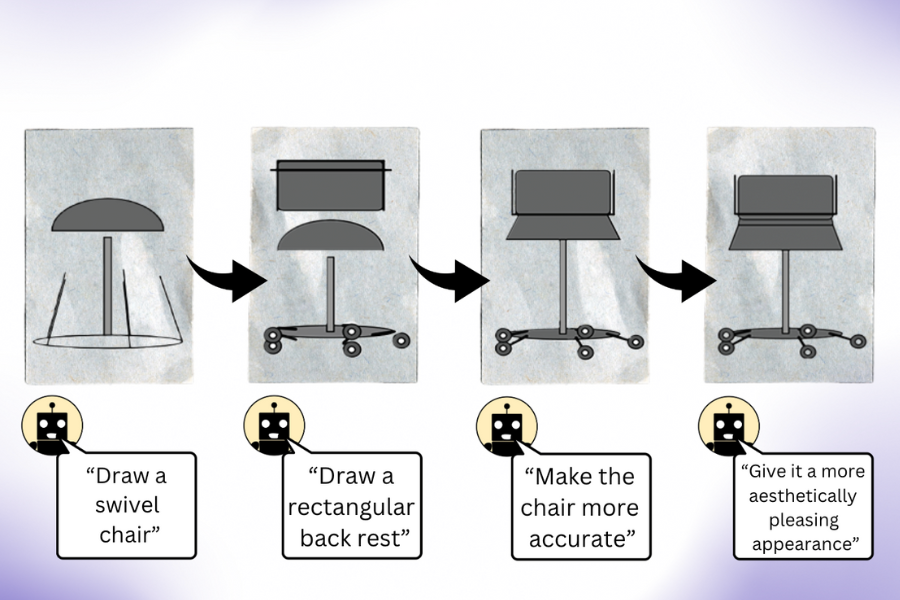

LLMs trained primarily on text can generate complex visual concepts through code with self-correction. Researchers used these illustrations to train an image-free computer vision system to recognize real photos.

Alex Shipps | MIT CSAIL • MIT

June 17, 2024 • ~6 min

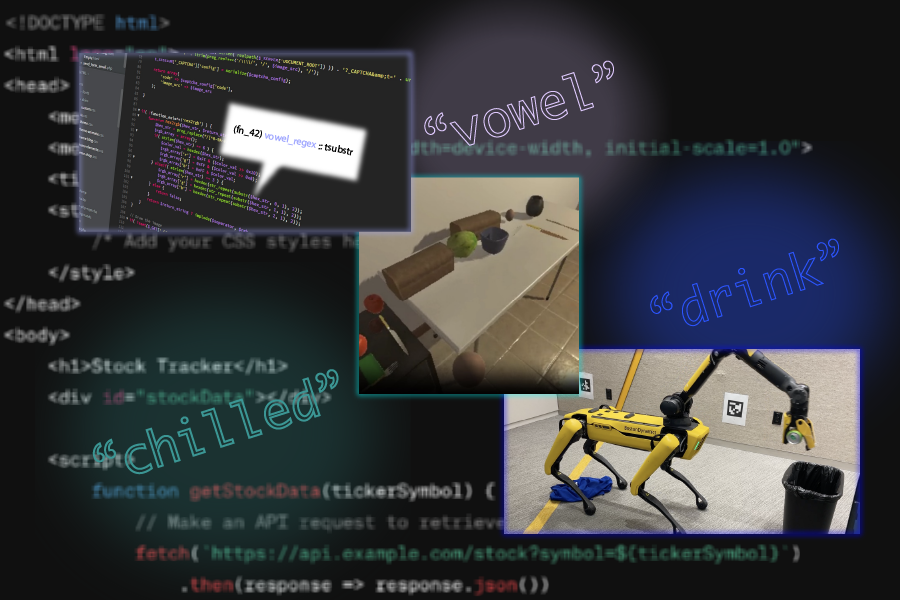

New algorithm discovers language just by watching videos

DenseAV, developed at MIT, learns to parse and understand the meaning of language just by watching videos of people talking, with potential applications in multimedia search, language learning, and robotics.

Rachel Gordon | MIT CSAIL • MIT

June 11, 2024 • ~9 min

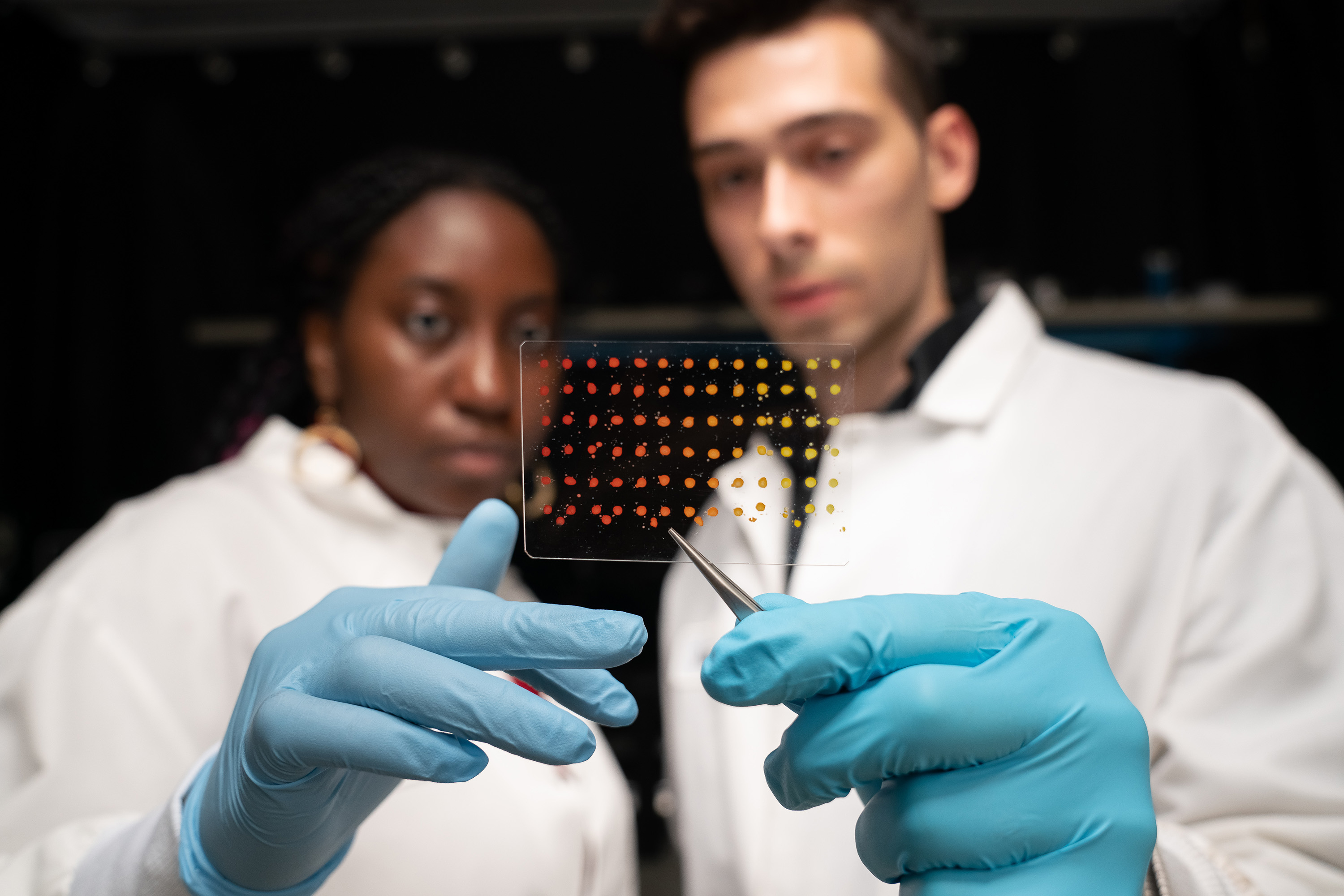

Controlled diffusion model can change material properties in images

“Alchemist” system adjusts the material attributes of specific objects within images to potentially modify video game models to fit different environments, fine-tune VFX, and diversify robotic training.

Alex Shipps | MIT CSAIL • MIT

May 28, 2024 • ~8 min
